3. Randomness and Random Walks#

3.1. Deterministic Randomness#

Some people are attracted to computing because of its deterministic nature. Nevertheless, many computer cycles are used for Monte Carlo calculations.

Monte Carlo calculations include elements of chance at their very core (hence the name). They involve random-like numbers generated by a computer to simulate naturally-random processes, such as thermal motion or radioactive decay.

Monte Carlo techniques are able to solve very challenging problems!

3.2. Random Sequences#

We define a sequence \(r_1, r_2,...\) as random if there are no correlations between the coefficients.

But: being random does not mean all numbers in the sequence are equally likely to occur!

If all numbers in a sequence are equally likely to occur: the sequence is called uniform.

Mathematically: the likelihood of a number occurring is described by a distribution \(P(r)\), where \(P(r)\mathrm{d}r\) is the probability of finding \(r\) in the interval \([r, r+\mathrm{d}r]\).

For a uniform distribution: \(P(r) = \mathrm{constant}\).

The standard random number generator on computers generates uniform distributions between 0 and 1, i.e. it outputs numbers in this interval with equal probability, yet independent from the previous number.

By their nature: computers are deterministic devices, and therefore cannot create a random sequence. Computed random number sequences must contain correlations, and therefore cannot be truly random. This implies that if we know \(r_m\) and its preceding elements, then it is always possible to figure out \(r_{m+1}\). For this reason, computers are said to generate pseudorandom numbers.

A primitive alternative to this is to read off a table of truly random numbers, determined by naturally-random process, such as radioactive decay, or to connect the computer to an experimental device that measures random events.

3.2.1. Random Number Generation#

Sequences of pseudorandom numbers can be generated on a computer via the “linear congruent” or “power residue” method:

To generate a pseudorandom sequence of numbers \(0 \leq r_i \leq M-1\) over the interval \([0, M-1]\), starting with \(r_1\), to obtain the next random number \(r_2\), multiply \(r_1\) by a constant \(a\), add another constant \(c\) and keep the remainder. Repeat this with \(r_2\) to get \(r_3\) and so on. So to get \(r_{i+1}\), given \(r_i\):

\(r_{i+1} = \mathrm{remainder} \left( \frac{a r_i + c}{M} \right)\)

The initial number, the seed, \(r_1\) is frequently supplied by the user.

In Python the remainder is given by the operator “%”, i.e. the above equation would read: rnext = (a * rprevious + c)%M.

One can then divide the whole sequence by \(M\) to get numbers in the interval \([0,1]\).

If we want random numbers in an interval \([A,B]\) instead, then we can transform the generated sequence in the interval \([0,1]\), by:

\(x_i = A + (B-A) r_i\)

Note that the linear congruent method becomes completely correlated if a particular interger comes up a second time. The whole cycle then repeats.

If e.g. 48-bit arithmetic is used, then \(M \sim 2^{48} \simeq 3\times 10^{14}\). If your program would then use approximately these many random numbers (feasible, e.g. if your program is performing Monte Carlo simulations in many dimensions!), then you may need to “re-seed” (i.e. choose a new seed) to reset the sequence during intermediate steps.

In Python, the default random.random() algorithm is the “Mersernne Twister”. It has an extremely long period: \(2^{19937} -1\) (a prime number) which is approximately \(4.3 \times 10^{6001}\). This is many orders of magnitude larger than the estimated number of particles in the observable universe (\(\sim 10^{87}\)).

See Wikipedia entry for more details, https://en.wikipedia.org/wiki/Mersenne_Twister, as well as original paper: https://dl.acm.org/doi/pdf/10.1145/272991.272995.

3.2.2. Generating Random Numbers of Arbitrary Distributions#

Supose we possess a random number generator that yields uniform random numbers in the interval \([0,1]\). We can use it to generate random numbers according to any distribution using the inversion method.

The method proceeds as follows:

Suppose we want to distribute random numbers according to a function \(f(x)\). We first calculate the cumulative distribution function (CDF) as: \(F(x) = \int^x_0 \mathrm{d}x f(x)\).

We then find the inverse of the desired CDF, i.e. we solve \(y=F(x)\) as \(F^{-1}(y)=x\).

If then “draw” \(y_0\) and calculate \(x_0 = F^{-1}(y_0)\), then \(x_0\) is distributed according to \(f(x)\).

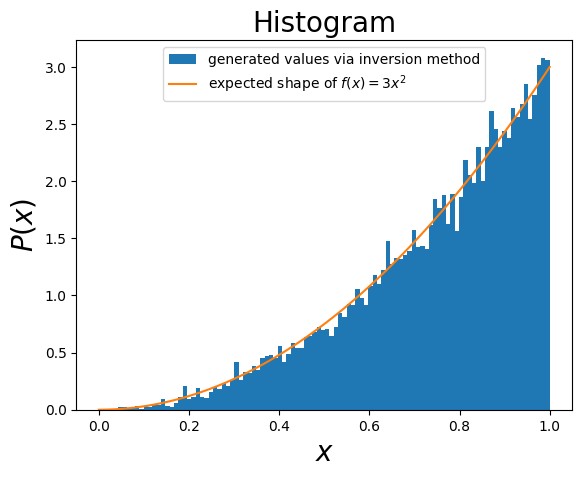

Here’s an example for \(f(x)=3x^2\) for \(x \in [0,1]\). Note that \(\int_0^1 \mathrm{d} x f(x)= 1\) for \(f(x)\) in \([0,1]\), as expected for a probability distribution.

The CDF is \(F(x) = x^3\). Then \(x = y^{1/3}\) and so we have:

import random

import math

import matplotlib.pyplot as plt # import matplotlib

import numpy as np

x = []

# We have x = (y)**(1/3) and we pick y in [0,1]

for i in range(10000): # generate 1000 points

y = random.random() # generate random numbers in [0,1]

x.append( (y)**(1/3) )

# create the real distribution for comparison. Use numpy"

xcomp = np.linspace(0,1,100)

ycomp = 3*np.square(xcomp)

# create a histogram of x:

fig, ax = plt.subplots() # create the elements required for matplotlib. This creates a figure containing a single axes.

# set the labels and titles:

ax.set_xlabel(r'$x$', fontsize=20) # set the x label

ax.set_ylabel(r'$P(x)$', fontsize=20) # set the y label. Note that the 'r' is necessary to remove the need for double slashes. You can use LaTeX!

ax.set_title('Histogram', fontsize=20) # set the title

# make a histogram of the values, density=True normalizes the histogram

ax.hist(x, label='generated values via inversion method', density=True, bins=100)

# compare to how we expect it to look like:

ax.plot(xcomp, ycomp, label='expected shape of $f(x) = 3x^2$')

# construct the legend:

ax.legend(loc='upper center') # Add a legend

plt.show() # show the plot here

This method predicates on the existence of an analytical expression for the CDF and its inverse, and therefore is not always useful! (see later)

3.3. Random Walks#

Consider a pizza brought into this room. The molecules from its smell collide randomly with other molecules in the air and eventually reach your nose.

The problem is to determine how many collisions, on average, a scent molecule makes in traveling a distance \(R\), given that it travels an average (root-mean-square) distance \(r_\mathrm{rms}\) between collisions.

3.3.1. Random Walks: Theoretical Description#

A “walker” takes sequential steps with the direction of each step independent of the direction of the previous step.

Let’s consider a two-dimensional model. Start at the origin and take \(N\) steps in the \(xy\)-plane of lengths:

\((\Delta x_1, \Delta y_1), (\Delta x_2, \Delta y_2), ..., (\Delta x_N, \Delta y_N)\)

then, we have:

\(R^2 = (\Delta x_1 + \Delta x_2 + ... + \Delta x_N)^2 + (\Delta y_1 + \Delta y_2 + ... + \Delta y_N)^2\).

\(\Rightarrow R^2 = \Delta x_1^2 + \Delta x_2^2 + \Delta x_N^2 + 2 \Delta x_1 \Delta x_2 + 2 \Delta x_1 \Delta x_3 + ... + (x \rightarrow y)\).

If a walk is random, then the walker is equally likely to travel in any direction at each step. If we take the average of \(R^2\) over a large number of such random steops, we expect that all cross terms would vanish:

\(\left< R^2 \right> = \left< (\Delta x_1^2 + \Delta y_1^2) + (\Delta x_2^2 + \Delta y_1^2) + .. + (\Delta x_N^2 + \Delta y_N^2) \right> = N \left<r^2\right> = N r_\mathrm{rms}^2\)

where \(r_\mathrm{rms} = \sqrt{\left< r^2 \right>}\).

So:

\(R_\mathrm{rms} = \sqrt{\left< R^2 \right>} = \sqrt{N} r_\mathrm{rms}\).

Let’s investigate this theoretical description through a simulation!

Before we do so, let’s introduce the concept of classes in Python (object-oriented programming), and use it in our example.

3.4. Digression: Object-Oriented Programming in Python#

Classes provide a means of bundling data and functionality together. Creating a new class creates a new type of object, allowing new instances of that type to be made.

The simplest form of a class definition looks like:

class ClassName:

<statement-1>

.

.

.

<statement-N>

You can read all about classes and object-oriented programming at: https://docs.python.org/3/tutorial/classes.html. Here we are going to proceed by example.

Suppose we wish to create a new object that has the properties we expect from complex numbers. We want this object to have a real and imaginary part, and we want to easily access those, as well as perform various operations on it: e.g. get its modulus, complex conjugate or argument.

We can begin by defining a class as follows:

# define a new class called Complex with real and imaginary parts (.r and .i):

class Complex:

"""A simple complex number class"""

def __init__(self, realpart, imagpart):

self.r = realpart

self.i = imagpart

print("I have created a new complex variable with r,i=", self.r, self.i)

The __init__() method is automatically invoked when you create an instance of this class:

# Create an "instance" of the Complex class:

x = Complex(3.0, 4.5) # the real part is 3.0 and the imaginary part is 4.5

print('real part=', x.r)

print('imaginary part=', x.i)

y = Complex(1.0, -1.5)

print(y.r, y.i)

I have created a new complex variable with r,i= 3.0 4.5

real part= 3.0

imaginary part= 4.5

I have created a new complex variable with r,i= 1.0 -1.5

1.0 -1.5

We can also add “attribute references”, i.e. functions that operate on instances of this function:

import math

# define the Complex class, with mod(), arg() and cc() functions to return:

# modulus, argument and complex conjugate

# see https://en.wikipedia.org/wiki/Complex_number for details

class Complex:

"""A more advanced complex number class"""

def __init__(self, realpart, imagpart):

self.r = realpart

self.i = imagpart

# the modulus:

def mod(self):

return math.sqrt(self.r**2 + self.i**2)

# the argument

def arg(self):

if self.i!=0 and self.r>0:

arg = 2 * math.atan(self.i/(self.r+self.mod()))

elif self.r<0 and self.i==0:

arg = math.pi

elif self.r==0 and self.i==0:

arg = float('nan')

return arg

# the complex conjugate, return a Complex object with - the imaginary part

def cc(self):

return Complex(self.r, -self.i)

# Create an "instance" of the updated Complex class:

x =Complex(3.0, 4.5) # the real part is 3.0 and the imaginary part is 4.5

print('real part=', x.r)

print('imaginary part=', x.i)

real part= 3.0

imaginary part= 4.5

# get the modulus:

x.mod()

5.408326913195984

# get the argument:

print(x.arg())

0.9827937232473289

# get the complex conjugate, another instance of the Complex class:

y = x.cc()

print(y.r)

print(y.i)

3.0

-4.5

You can also define functions that act on two instantiations of the class. E.g. let’s say we want to be able to multiply two complex numbers together correctly.

import math

# define the Complex class, with mod(), arg() and cc() functions to return:

# modulus, argument and complex conjugate

# see https://en.wikipedia.org/wiki/Complex_number for details

class Complex:

"""A more advanced complex number class"""

def __init__(self, realpart, imagpart):

self.r = realpart

self.i = imagpart

# the modulus:

def mod(self):

return math.sqrt(self.r**2 + self.i**2)

# the argument

def arg(self):

if self.i!=0 and self.r>0:

arg = 2 * math.atan(self.i/(self.r+self.mod()))

elif self.r<0 and self.i==0:

arg = math.pi

elif self.r==0 and self.i==0:

arg = float('nan')

return arg

# the complex conjugate, return a Complex object with - the imaginary part

def cc(self):

return Complex(self.r, -self.i)

# multiply two complex numbers together:

def multiply(self, other):

return Complex(self.r * other.r - self.i * other.i, self.r * other.i + self.i * other.r)

Let’s try it out!

# define two complex numbers:

x = Complex(3.0, 4.5)

z = Complex(1.0, 2.0)

# multiply them to get another complex number

h = x.multiply(z)

print(h.r)

print(h.i)

-6.0

10.5

(Check the answer!)

Or we can multiply x by its complex conjugate to get a real number:

# we can get the c.c. directly:

u = x.multiply(x.cc())

# print the real part and the imaginary parts: the latter should be zero.

print(u.r)

print(u.i)

# the square root of the product x * x complex conjugate should the the modulus!

print(x.mod())

print(math.sqrt(u.r))

29.25

0.0

5.408326913195984

5.408326913195984

3.5. Random-Walk Simulation#

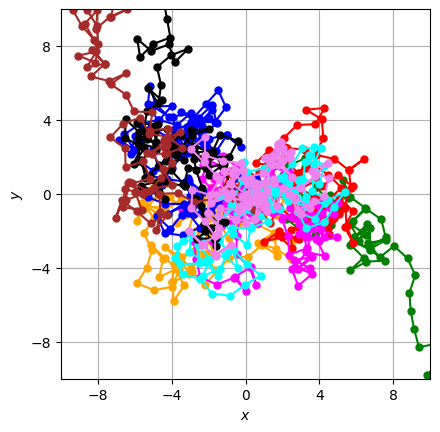

We can now proceed with a simulation of random walks. We will define a class called “walker”, which will allow us to define multiple walkers and make them walk around.

import random

import math

class Walker:

"""A random walker class"""

# instantiations of this class are initialized with an initial position

def __init__(self, initialx, initialy):

# initialize the x and y positions:

self.x = initialx

self.y = initialy

# keep the initial positions:

self.xinit = initialx

self.yinit = initialy

# keep all the positions that the walker has moved through in a list:

self.allx = []

self.ally = []

self.allx.append(initialx)

self.ally.append(initialy)

# now let's create a function that allows us to take random steps with a certain stepsize

def move(self, stepsize):

deltax = (2*random.random()-1) # choose random variables in the range [-1,1]

deltay = (2*random.random()-1)

# normalize to stepsize:

L = math.sqrt(deltax**2 + deltay**2)

deltax = deltax/L

deltay = deltay/L

# add these to the current position

self.x = self.x + deltax

self.y = self.y + deltay

self.allx.append(self.x)

self.ally.append(self.y)

# get the distance from the starting position

def distance(self):

return math.sqrt( (self.x-self.xinit)**2 + (self.y-self.yinit)**2 )

Let’s test this out with a single walker starting from (0,0):

# initialize "Walker1", an instance of the class Walker

Walker1 = Walker(0,0)

# move Walker1 1000 times with stepsize 1:

for i in range(1000):

Walker1.move(1.0)

# get the distance from the origin:

print(Walker1.distance())

44.327009590905234

Our next task is to initialize N (=9 to start with) walkers at the origin and follow their path visually! We are going to use matplotlib and evolve walkers “dynamically” on a graph.

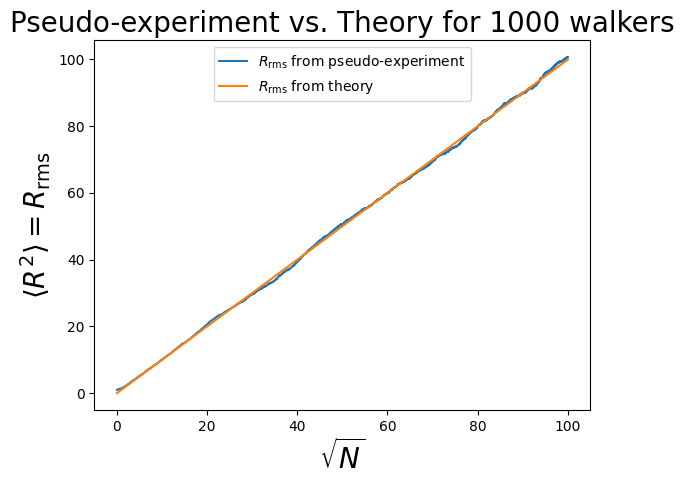

It also will be interesting to investigate whether our theoretical expectations agree with this simulation.

Let’s also calculate, and store, the root-mean-square (rms) distance versus the of number of steps.

import numpy as np

import matplotlib.pyplot as plt

import time # various time functions

from tqdm import tqdm # progress bar

import matplotlib.ticker as ticker #

# this allows us to access dynamic updating of the plot:

from IPython import display

from IPython.display import clear_output

# setup the axis:

fig,ax = plt.subplots(1,1)

#get the dynamic display:

dynamicdisplay = display.display("", display_id=True)

# put a grid on the graph:

ax.grid(True, which='both')

# set the aspect ratio to 1:

ax.set_aspect(1)

# set some parameters here:

n = 9 # the number of walkers

N = 100 # the number of steps

step = 1 # the step size

Nupdate = 1 # the frequency of steps used to update the plot

# set the labels and limits:

ax.set_xlabel(r'$x$')

ax.set_ylabel(r'$y$')

limitsize = math.sqrt(N)*step

ax.set_xlim(-limitsize,limitsize)

ax.set_ylim(-limitsize,limitsize)

# set the major ticks to correspond to twice step size:

ax.xaxis.set_major_locator(ticker.MultipleLocator(4*step))

ax.yaxis.set_major_locator(ticker.MultipleLocator(4*step))

# initialize n walkers and put them in a list:

WalkerList = []

WalkerColor = [] # create a list with the colors of each walker

colors = [ 'green', 'orange', 'red', 'magenta', 'blue', 'cyan', 'black', 'brown', 'violet'] # 9 colours

j = 0 # counter to make sure we don't go over the 9 colors

for w in range(n):

WalkerList.append(Walker(0,0))

# set the color of each walker. If we w exceeds 8 then reset the counter j:

if w-8*j > 8:

j = j + 1

else:

pass

WalkerColor.append(colors[w-8*j])

# now go through all the n walkers and get them to perform N steps

for i in tqdm(range(N)):

# go through all the walkers in the WalkerList

for j, walker in enumerate(WalkerList):

# move them one step in a random direction

walker.move(step)

if i%Nupdate==0: # only update every Nupdate steps

# plot them!

# the list will contain the points so we can remove them later:

walkers_plot = []

for j, walker in enumerate(WalkerList):

walkers_plot.append(ax.plot(walker.allx, walker.ally, marker='o',color=WalkerColor[j], ls='-', markersize=5))

dynamicdisplay.update(fig) # update the figure in the notebook

# remove the walkers from display for next run:

for j, walker in enumerate(WalkerList):

wmarker = walkers_plot[j].pop(0)

wmarker.remove()

time.sleep(0.1) # "sleep" for half a second to allow us to see the evolution

0%| | 0/100 [00:00<?, ?it/s]

1%| | 1/100 [00:00<00:15, 6.42it/s]

2%|▏ | 2/100 [00:00<00:14, 6.58it/s]

3%|▎ | 3/100 [00:00<00:14, 6.62it/s]

4%|▍ | 4/100 [00:00<00:14, 6.67it/s]

5%|▌ | 5/100 [00:00<00:14, 6.66it/s]

6%|▌ | 6/100 [00:00<00:15, 6.16it/s]

7%|▋ | 7/100 [00:01<00:14, 6.30it/s]

8%|▊ | 8/100 [00:01<00:14, 6.40it/s]

9%|▉ | 9/100 [00:01<00:14, 6.49it/s]

10%|█ | 10/100 [00:01<00:13, 6.54it/s]

11%|█ | 11/100 [00:01<00:13, 6.59it/s]

12%|█▏ | 12/100 [00:01<00:13, 6.61it/s]

13%|█▎ | 13/100 [00:01<00:13, 6.62it/s]

14%|█▍ | 14/100 [00:02<00:12, 6.62it/s]

15%|█▌ | 15/100 [00:02<00:12, 6.63it/s]

16%|█▌ | 16/100 [00:02<00:12, 6.61it/s]

17%|█▋ | 17/100 [00:02<00:12, 6.62it/s]

18%|█▊ | 18/100 [00:02<00:12, 6.64it/s]

19%|█▉ | 19/100 [00:02<00:12, 6.66it/s]

20%|██ | 20/100 [00:03<00:12, 6.63it/s]

21%|██ | 21/100 [00:03<00:11, 6.62it/s]

22%|██▏ | 22/100 [00:03<00:11, 6.60it/s]

23%|██▎ | 23/100 [00:03<00:11, 6.56it/s]

24%|██▍ | 24/100 [00:03<00:11, 6.56it/s]

25%|██▌ | 25/100 [00:03<00:11, 6.56it/s]

26%|██▌ | 26/100 [00:03<00:11, 6.54it/s]

27%|██▋ | 27/100 [00:04<00:11, 6.52it/s]

28%|██▊ | 28/100 [00:04<00:11, 6.53it/s]

29%|██▉ | 29/100 [00:04<00:10, 6.53it/s]

30%|███ | 30/100 [00:04<00:10, 6.52it/s]

31%|███ | 31/100 [00:04<00:10, 6.50it/s]

32%|███▏ | 32/100 [00:04<00:10, 6.48it/s]

33%|███▎ | 33/100 [00:05<00:10, 6.48it/s]

34%|███▍ | 34/100 [00:05<00:10, 6.45it/s]

35%|███▌ | 35/100 [00:05<00:10, 6.45it/s]

36%|███▌ | 36/100 [00:05<00:09, 6.41it/s]

37%|███▋ | 37/100 [00:05<00:09, 6.38it/s]

38%|███▊ | 38/100 [00:05<00:09, 6.38it/s]

39%|███▉ | 39/100 [00:05<00:09, 6.40it/s]

40%|████ | 40/100 [00:06<00:09, 6.40it/s]

41%|████ | 41/100 [00:06<00:09, 6.40it/s]

42%|████▏ | 42/100 [00:06<00:09, 6.41it/s]

43%|████▎ | 43/100 [00:06<00:08, 6.41it/s]

44%|████▍ | 44/100 [00:06<00:08, 6.42it/s]

45%|████▌ | 45/100 [00:06<00:08, 6.41it/s]

46%|████▌ | 46/100 [00:07<00:08, 6.42it/s]

47%|████▋ | 47/100 [00:07<00:08, 6.41it/s]

48%|████▊ | 48/100 [00:07<00:08, 6.39it/s]

49%|████▉ | 49/100 [00:07<00:08, 6.36it/s]

50%|█████ | 50/100 [00:07<00:07, 6.36it/s]

51%|█████ | 51/100 [00:07<00:07, 6.37it/s]

52%|█████▏ | 52/100 [00:08<00:07, 6.35it/s]

53%|█████▎ | 53/100 [00:08<00:07, 6.36it/s]

54%|█████▍ | 54/100 [00:08<00:07, 6.36it/s]

55%|█████▌ | 55/100 [00:08<00:07, 6.35it/s]

56%|█████▌ | 56/100 [00:08<00:06, 6.33it/s]

57%|█████▋ | 57/100 [00:08<00:06, 6.35it/s]

58%|█████▊ | 58/100 [00:08<00:06, 6.34it/s]

59%|█████▉ | 59/100 [00:09<00:06, 6.32it/s]

60%|██████ | 60/100 [00:09<00:06, 6.32it/s]

61%|██████ | 61/100 [00:09<00:06, 6.31it/s]

62%|██████▏ | 62/100 [00:09<00:06, 6.31it/s]

63%|██████▎ | 63/100 [00:09<00:05, 6.30it/s]

64%|██████▍ | 64/100 [00:09<00:05, 6.31it/s]

65%|██████▌ | 65/100 [00:10<00:05, 6.30it/s]

66%|██████▌ | 66/100 [00:10<00:05, 6.30it/s]

67%|██████▋ | 67/100 [00:10<00:05, 6.29it/s]

68%|██████▊ | 68/100 [00:10<00:05, 6.27it/s]

69%|██████▉ | 69/100 [00:10<00:04, 6.24it/s]

70%|███████ | 70/100 [00:10<00:04, 6.19it/s]

71%|███████ | 71/100 [00:11<00:04, 6.07it/s]

72%|███████▏ | 72/100 [00:11<00:04, 6.12it/s]

73%|███████▎ | 73/100 [00:11<00:04, 6.15it/s]

74%|███████▍ | 74/100 [00:11<00:04, 6.16it/s]

75%|███████▌ | 75/100 [00:11<00:04, 6.18it/s]

76%|███████▌ | 76/100 [00:11<00:03, 6.20it/s]

77%|███████▋ | 77/100 [00:12<00:03, 6.21it/s]

78%|███████▊ | 78/100 [00:12<00:03, 6.20it/s]

79%|███████▉ | 79/100 [00:12<00:03, 6.22it/s]

80%|████████ | 80/100 [00:12<00:03, 6.23it/s]

81%|████████ | 81/100 [00:12<00:03, 6.21it/s]

82%|████████▏ | 82/100 [00:12<00:03, 5.73it/s]

83%|████████▎ | 83/100 [00:13<00:02, 5.87it/s]

84%|████████▍ | 84/100 [00:13<00:02, 5.98it/s]

85%|████████▌ | 85/100 [00:13<00:02, 6.03it/s]

86%|████████▌ | 86/100 [00:13<00:02, 6.08it/s]

87%|████████▋ | 87/100 [00:13<00:02, 6.12it/s]

88%|████████▊ | 88/100 [00:13<00:01, 6.13it/s]

89%|████████▉ | 89/100 [00:13<00:01, 6.15it/s]

90%|█████████ | 90/100 [00:14<00:01, 6.16it/s]

91%|█████████ | 91/100 [00:14<00:01, 6.19it/s]

92%|█████████▏| 92/100 [00:14<00:01, 6.18it/s]

93%|█████████▎| 93/100 [00:14<00:01, 6.19it/s]

94%|█████████▍| 94/100 [00:14<00:00, 6.17it/s]

95%|█████████▌| 95/100 [00:14<00:00, 6.16it/s]

96%|█████████▌| 96/100 [00:15<00:00, 6.17it/s]

97%|█████████▋| 97/100 [00:15<00:00, 6.18it/s]

98%|█████████▊| 98/100 [00:15<00:00, 6.18it/s]

99%|█████████▉| 99/100 [00:15<00:00, 6.16it/s]

100%|██████████| 100/100 [00:15<00:00, 6.15it/s]

100%|██████████| 100/100 [00:15<00:00, 6.34it/s]

Let’s plot the RMS distance versus the square root of N! First, let’s increase the number of walkers and the number of steps, without plotting:

import numpy as np

from tqdm import tqdm # progress bar

# set some parameters here:

n = 1000 # the number of walkers

N = 10000 # the number of steps

step = 1 # the step size

Nupdate = 1 # the frequency of steps used to update the plot

# initialize n walkers and put them in a list:

WalkerList = []

for w in range(n):

WalkerList.append(Walker(0,0))

# list to store R squared average (i.e. the rms) *over all the walkers* after N steps:

R_rms_list = []

# now go through all the n walkers and get them to perform N steps

for i in tqdm(range(N)):

Rsq_av = 0 # variable used to calculate the square average

# go through all the walkers in the WalkerList

for j, walker in enumerate(WalkerList):

# move them one step in a random direction

walker.move(step)

# add to the average:

Rsq_av = Rsq_av + walker.distance()**2

# get the average and push it into the list:

R_rms_list.append(math.sqrt(Rsq_av/n)) # divide by the number of walkers to get the average

0%| | 0/10000 [00:00<?, ?it/s]

1%| | 107/10000 [00:00<00:09, 1065.43it/s]

2%|▏ | 214/10000 [00:00<00:09, 1047.93it/s]

3%|▎ | 319/10000 [00:00<00:09, 1039.78it/s]

4%|▍ | 423/10000 [00:00<00:09, 1033.36it/s]

5%|▌ | 527/10000 [00:00<00:09, 1033.17it/s]

6%|▋ | 631/10000 [00:00<00:09, 1034.67it/s]

7%|▋ | 735/10000 [00:00<00:08, 1033.32it/s]

8%|▊ | 839/10000 [00:00<00:08, 1033.12it/s]

9%|▉ | 943/10000 [00:00<00:08, 1031.79it/s]

10%|█ | 1047/10000 [00:01<00:08, 1029.07it/s]

12%|█▏ | 1150/10000 [00:01<00:08, 1027.30it/s]

13%|█▎ | 1253/10000 [00:01<00:08, 1022.85it/s]

14%|█▎ | 1357/10000 [00:01<00:08, 1027.27it/s]

15%|█▍ | 1460/10000 [00:01<00:08, 1020.83it/s]

16%|█▌ | 1564/10000 [00:01<00:08, 1025.52it/s]

17%|█▋ | 1667/10000 [00:01<00:08, 1018.72it/s]

18%|█▊ | 1773/10000 [00:01<00:07, 1028.70it/s]

19%|█▉ | 1876/10000 [00:01<00:07, 1024.47it/s]

20%|█▉ | 1981/10000 [00:01<00:07, 1031.39it/s]

21%|██ | 2085/10000 [00:02<00:07, 1019.93it/s]

22%|██▏ | 2191/10000 [00:02<00:07, 1029.95it/s]

23%|██▎ | 2295/10000 [00:02<00:07, 1020.26it/s]

24%|██▍ | 2400/10000 [00:02<00:07, 1027.92it/s]

25%|██▌ | 2506/10000 [00:02<00:07, 1034.63it/s]

26%|██▌ | 2610/10000 [00:02<00:07, 1021.63it/s]

27%|██▋ | 2715/10000 [00:02<00:07, 1028.78it/s]

28%|██▊ | 2820/10000 [00:02<00:06, 1034.99it/s]

29%|██▉ | 2924/10000 [00:02<00:06, 1020.51it/s]

30%|███ | 3029/10000 [00:02<00:06, 1028.38it/s]

31%|███▏ | 3134/10000 [00:03<00:06, 1033.72it/s]

32%|███▏ | 3239/10000 [00:03<00:06, 1038.00it/s]

33%|███▎ | 3343/10000 [00:03<00:06, 1020.59it/s]

34%|███▍ | 3446/10000 [00:03<00:06, 1010.52it/s]

35%|███▌ | 3548/10000 [00:03<00:06, 1009.84it/s]

37%|███▋ | 3653/10000 [00:03<00:06, 1020.99it/s]

38%|███▊ | 3756/10000 [00:03<00:06, 1005.63it/s]

39%|███▊ | 3861/10000 [00:03<00:06, 1017.53it/s]

40%|███▉ | 3966/10000 [00:03<00:05, 1026.60it/s]

41%|████ | 4071/10000 [00:03<00:05, 1033.25it/s]

42%|████▏ | 4175/10000 [00:04<00:05, 1009.10it/s]

43%|████▎ | 4280/10000 [00:04<00:05, 1019.38it/s]

44%|████▍ | 4385/10000 [00:04<00:05, 1026.47it/s]

45%|████▍ | 4488/10000 [00:04<00:05, 1015.87it/s]

46%|████▌ | 4590/10000 [00:04<00:05, 966.34it/s]

47%|████▋ | 4688/10000 [00:04<00:05, 936.76it/s]

48%|████▊ | 4785/10000 [00:04<00:05, 945.76it/s]

49%|████▉ | 4882/10000 [00:04<00:05, 952.66it/s]

50%|████▉ | 4987/10000 [00:04<00:05, 979.86it/s]

51%|█████ | 5092/10000 [00:05<00:04, 998.31it/s]

52%|█████▏ | 5197/10000 [00:05<00:04, 1010.83it/s]

53%|█████▎ | 5299/10000 [00:05<00:04, 981.58it/s]

54%|█████▍ | 5399/10000 [00:05<00:04, 983.46it/s]

55%|█████▍ | 5499/10000 [00:05<00:04, 985.64it/s]

56%|█████▌ | 5604/10000 [00:05<00:04, 1003.54it/s]

57%|█████▋ | 5705/10000 [00:05<00:04, 1004.03it/s]

58%|█████▊ | 5806/10000 [00:05<00:04, 1001.28it/s]

59%|█████▉ | 5907/10000 [00:05<00:04, 966.54it/s]

60%|██████ | 6010/10000 [00:05<00:04, 983.34it/s]

61%|██████ | 6115/10000 [00:06<00:03, 1001.96it/s]

62%|██████▏ | 6220/10000 [00:06<00:03, 1015.21it/s]

63%|██████▎ | 6325/10000 [00:06<00:03, 1024.96it/s]

64%|██████▍ | 6430/10000 [00:06<00:03, 1031.33it/s]

65%|██████▌ | 6535/10000 [00:06<00:03, 1036.63it/s]

66%|██████▋ | 6639/10000 [00:06<00:03, 1001.51it/s]

67%|██████▋ | 6744/10000 [00:06<00:03, 1013.85it/s]

68%|██████▊ | 6849/10000 [00:06<00:03, 1022.08it/s]

70%|██████▉ | 6953/10000 [00:06<00:02, 1025.17it/s]

71%|███████ | 7057/10000 [00:06<00:02, 1028.29it/s]

72%|███████▏ | 7160/10000 [00:07<00:02, 1026.10it/s]

73%|███████▎ | 7264/10000 [00:07<00:02, 1028.27it/s]

74%|███████▎ | 7367/10000 [00:07<00:02, 1028.24it/s]

75%|███████▍ | 7470/10000 [00:07<00:02, 987.80it/s]

76%|███████▌ | 7575/10000 [00:07<00:02, 1003.80it/s]

77%|███████▋ | 7679/10000 [00:07<00:02, 1012.76it/s]

78%|███████▊ | 7783/10000 [00:07<00:02, 1018.87it/s]

79%|███████▉ | 7886/10000 [00:07<00:02, 1021.27it/s]

80%|███████▉ | 7989/10000 [00:07<00:01, 1023.72it/s]

81%|████████ | 8092/10000 [00:07<00:01, 1024.89it/s]

82%|████████▏ | 8195/10000 [00:08<00:01, 1022.74it/s]

83%|████████▎ | 8299/10000 [00:08<00:01, 1025.70it/s]

84%|████████▍ | 8402/10000 [00:08<00:01, 979.66it/s]

85%|████████▌ | 8506/10000 [00:08<00:01, 996.14it/s]

86%|████████▌ | 8610/10000 [00:08<00:01, 1008.51it/s]

87%|████████▋ | 8715/10000 [00:08<00:01, 1018.24it/s]

88%|████████▊ | 8820/10000 [00:08<00:01, 1025.76it/s]

89%|████████▉ | 8925/10000 [00:08<00:01, 1030.87it/s]

90%|█████████ | 9030/10000 [00:08<00:00, 1034.94it/s]

91%|█████████▏| 9135/10000 [00:08<00:00, 1038.30it/s]

92%|█████████▏| 9240/10000 [00:09<00:00, 1039.67it/s]

93%|█████████▎| 9345/10000 [00:09<00:00, 1038.63it/s]

94%|█████████▍| 9449/10000 [00:09<00:00, 1036.22it/s]

96%|█████████▌| 9553/10000 [00:09<00:00, 984.23it/s]

97%|█████████▋| 9657/10000 [00:09<00:00, 1000.10it/s]

98%|█████████▊| 9762/10000 [00:09<00:00, 1012.35it/s]

99%|█████████▊| 9867/10000 [00:09<00:00, 1021.11it/s]

100%|█████████▉| 9972/10000 [00:09<00:00, 1027.82it/s]

100%|██████████| 10000/10000 [00:09<00:00, 1015.72it/s]

import matplotlib.pyplot as plt

import math

# first, create a list for square root(N):

sqrtN = [math.sqrt(x) for x in range(N)]

# the theoretical expectation is R_rms = sqrt(N) * r_rms (where r_rms = step in this case):

R_rms_theory = [math.sqrt(y)*step for y in range(N)]

# setup the axis:

fig,ax = plt.subplots(1,1)

# set the labels and titles:

ax.set_xlabel(r'$\sqrt{N}$', fontsize=20) # set the x label

ax.set_ylabel(r'$\left<R^2\right> = R_\mathrm{rms}$', fontsize=20) # set the y label. Note that the 'r' is necessary to remove the need for double slashes. You can use LaTeX!

ax.set_title('Pseudo-experiment vs. Theory for ' + str(n) + ' walkers' , fontsize=20) # set the title

# plot the pseudo-experiment result

ax.plot(sqrtN, R_rms_list, ls='-', label=r'$R_\mathrm{rms}$ from pseudo-experiment')

# plot the theoretical expectation

ax.plot(sqrtN, R_rms_theory, ls='-', label=r'$R_\mathrm{rms}$ from theory')

# construct the legend:

ax.legend(loc='upper center') # Add a legend

plt.show() # show the plot here

E.g. for n = 1000 walkers and N = 10000 steps, the agreement is excellent!